EasyDiagram: Next Steps

Here I define a multistage strategy for improving EasyDiagram’s performance and functionality while maintaining an optimal performance-to-cost balance and allowing for organic growth. Establishing a refactoring plan with clearly defined steps is crucial for the project’s long-term success. So let’s dive in!

Analysis

Access Patterns

There are several different types of traffic that the system will have to handle:

Image Viewing traffic, including the built-in editor view, is the most common type of traffic in the system and can easily reach hundreds of requests per second (RPS).

Image Rendering traffic is another common type of traffic, which a user generates frequently right after creating a new diagram, typically tens of requests per diagram. It is also the slowest type of request, as rendering an image takes approximately 1.2 seconds.

Diagram Management traffic includes listing, creating, deleting, and renaming diagrams, as well as managing access to them. It’s a common access pattern, but it does not generate much traffic.

Landing Page traffic is not very common, as the landing page is visited only by newcomers or users wanting to create a diagram.

Static Resource (non-diagram-related) traffic consists of resources that are usually served from a CDN.

Registration and Authentication traffic is the least common type of traffic to the system.

The traffic generated by registration, authentication, and diagram management activities is relatively low, and the initial MVP provides sufficient performance. Therefore, there likely won’t be a need to worry about it until the system grows to hundreds of users and millions of diagrams.

Image Rendering is another story, as the initial MVP can handle approximately 3 RPS at most, which significantly limits the number of users working at the same time.

Since diagrams can be made public, Image Viewing traffic does not directly correlate with the number of active users. The initial MVP can handle 3K RPS, which is quite good for a demo project, but for production, it may be too low given the nature of the traffic.

Roadmap

Given the access patterns and benchmark results, the diagram rendering view is the first area that requires significant improvement. The next areas to address are the image and built-in editor views, as they are the most common access patterns. Consequently, the roadmap focuses primarily on these areas while also incorporating some functional improvements. Broader performance optimizations will address the rest of the logic.

I propose implementing these improvements in the following order:

1. Introduce image versioning
2. Make rendering asynchronous with horizontally scalable renderers
3. Implement an effective caching strategy
4. Extend the data model and support database sharding
5. Implement performance-critical parts in Rust (for cases where Python becomes a bottleneck)
6. Move static assets to a CDN

The roadmap is ordered by priority: high-priority items are at the top, each step enables the next one, and low-priority items are at the bottom. While performance optimization and horizontal scaling are top priorities, the plan also aims to keep the amount of scaling out, and the cost that comes with it, to a minimum.

Step 1. Image Versioning

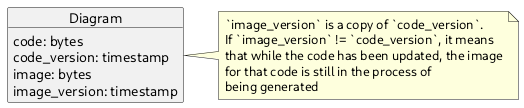

Image versioning is important because it enables asynchronous rendering and effective caching. The approach involves adding two version fields, code_version and image_version, to track changes in the code and the image:

code_version is generated immediately after the code is updated and can be returned to the user. This version is a unique, incrementing value (for instance, a timestamp combined with a random suffix).

image_version is set to the code_version of the code from which the image was rendered.

If image_version does not match code_version, it indicates that the image is outdated and should be regenerated, or that the code is invalid.
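To make the versioning rules concrete, here is a minimal Python sketch. It assumes versions are stored as integers built from a millisecond timestamp in the high bits plus 16 random bits in the low bits, which is one possible reading of "a timestamp combined with a random suffix". The Diagram class and the next_code_version, update_code, mark_rendered, and is_image_stale names are hypothetical.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


def next_code_version(previous: Optional[int] = None) -> int:
    """Hypothetical encoding: millisecond timestamp << 16 | 16 random bits."""
    candidate = ((time.time_ns() // 1_000_000) << 16) | secrets.randbits(16)
    # Keep versions strictly increasing even if two updates land in the same
    # millisecond or the wall clock steps backwards.
    if previous is not None and candidate <= previous:
        return previous + 1
    return candidate


@dataclass
class Diagram:
    code: str
    code_version: int
    image_version: Optional[int] = None

    def update_code(self, code: str) -> int:
        self.code = code
        self.code_version = next_code_version(self.code_version)
        return self.code_version  # can be returned to the client immediately

    def mark_rendered(self, rendered_from: int) -> None:
        # A late render of older code must not move image_version backwards.
        if self.image_version is None or rendered_from > self.image_version:
            self.image_version = rendered_from

    def is_image_stale(self) -> bool:
        # Either the image is outdated or the latest code has not rendered
        # (possibly because it is invalid).
        return self.image_version != self.code_version
```

Encoding the version as an integer keeps comparisons such as "image_version >= v" cheap and makes the column usable in indexed range queries.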

Step 2. Async Image Rendering

Context: PlantUML requires about 0.5 to 1.5 seconds to render an image. Making a UI request wait that long for a rendered image hurts scalability because it ties up a server worker for the whole render and prevents it from handling other requests.

Goal: Implement a more efficient way of dealing with image rendering requests.

Solution: By delegating image rendering to an asynchronous task, the server can immediately respond with HTTP 204 and free itself to handle other requests, while background workers can be scaled out to accommodate the rendering load.
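A rough way to size the pool of background renderers is Little's law: the number of renders in flight equals the arrival rate multiplied by the time per render. The sketch below assumes each renderer process handles one render at a time and uses the roughly 1.2-second average render time from the analysis; the function name and the headroom parameter are illustrative.

```python
import math


def renderers_needed(render_rps: float, render_seconds: float, headroom: float = 0.0) -> int:
    """Little's law: concurrent renders = arrival rate x time per render."""
    in_flight = render_rps * render_seconds * (1.0 + headroom)
    # Round away floating-point noise (10 * 1.1 == 11.000000000000002) before ceil.
    return math.ceil(round(in_flight, 6))
```

For example, sustaining 30 rendering RPS at 1.2 seconds per render needs about 36 renderer processes, and a 25% headroom raises that to 45.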

To obtain a new rendered image, the UI uses long polling until the image_version matches the code_version. Long polling is straightforward to implement and doesn’t require additional infrastructure, but a backoff safeguard is necessary to mitigate the possibility of DoS-ing the service. Eventually, long polling can be replaced with WebSockets or server push notifications for more efficient updates.
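The client loop can be sketched as polling with capped exponential backoff and jitter. This is a minimal Python sketch: fetch_image_version stands in for a hypothetical request that returns the diagram's current image_version, and the delay, cap, and timeout values are assumptions. It uses plain repeated requests; with true long polling, each fetch would block on the server until a new version is available, and the same backoff would apply between attempts.

```python
import random
import time
from typing import Callable, Optional


def wait_for_render(
    fetch_image_version: Callable[[], Optional[int]],
    code_version: int,
    initial_delay: float = 0.5,
    max_delay: float = 8.0,
    timeout: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until image_version catches up with code_version; False on timeout."""
    delay = initial_delay
    deadline = clock() + timeout
    while True:
        image_version = fetch_image_version()
        # ">=" also accepts an image rendered from an even newer code update.
        if image_version is not None and image_version >= code_version:
            return True
        remaining = deadline - clock()
        if remaining <= 0:
            return False  # e.g. the code is invalid and will never render
        # "Equal jitter": wait between delay/2 and delay so clients don't poll in lockstep.
        pause = delay / 2 + random.uniform(0, delay / 2)
        sleep(min(pause, remaining))
        delay = min(delay * 2, max_delay)  # exponential backoff, capped
```

The timeout doubles as the safeguard for code that never renders, so a broken diagram cannot keep a client polling forever.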

To communicate with workers, the server uses a queue table in the same database that stores the diagram data. This design choice follows the transactional outbox pattern to guarantee atomicity and consistency. Additionally, due to the nature of image rendering, only the most recent message matters, which is not trivial to implement with typical messaging brokers like RabbitMQ or SQS. However, PostgreSQL naturally supports this via INSERT INTO … ON CONFLICT (id) DO UPDATE syntax. Since the database is already provisioned, this approach also avoids introducing any additional infrastructure overhead.
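A minimal sketch of the enqueue side follows. It uses Python's built-in sqlite3 module only so that it runs without a PostgreSQL server; SQLite 3.24+ accepts the same INSERT ... ON CONFLICT ... DO UPDATE form, and with a PostgreSQL driver the placeholders would typically be %s instead of ?. The table and column names (diagrams, render_queue) and the save_code function are hypothetical.

```python
import sqlite3

SCHEMA = """
CREATE TABLE diagrams (
    id INTEGER PRIMARY KEY,
    code TEXT NOT NULL,
    code_version INTEGER NOT NULL,
    image_version INTEGER
);
-- At most one pending render task per diagram.
CREATE TABLE render_queue (
    diagram_id INTEGER PRIMARY KEY REFERENCES diagrams(id),
    code_version INTEGER NOT NULL
);
"""

ENQUEUE_SQL = """
INSERT INTO render_queue (diagram_id, code_version)
VALUES (?, ?)
ON CONFLICT (diagram_id) DO UPDATE
SET code_version = excluded.code_version
WHERE excluded.code_version > render_queue.code_version
"""


def save_code(conn: sqlite3.Connection, diagram_id: int, code: str, code_version: int) -> None:
    """Update the diagram and (re)queue its render in a single transaction."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            "UPDATE diagrams SET code = ?, code_version = ? WHERE id = ?",
            (code, code_version, diagram_id),
        )
        # Upsert: a newer version replaces the pending task instead of adding another.
        conn.execute(ENQUEUE_SQL, (diagram_id, code_version))
```

The WHERE clause on DO UPDATE keeps a delayed, older request from overwriting a newer pending task.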

The downside of this approach is that managing the queue table becomes the responsibility of the application logic. However, several libraries provide the required functionality. Dramatiq is especially well-suited because PostgreSQL can serve as its task broker (through the third-party dramatiq-pg package). By customizing the relevant parts of the broker, you can insert or update tasks based on the diagram_id, ensuring that each diagram has at most one associated task.

Step 3. Caching

Goal: Reduce database load.

Context: Diagrams have two access patterns:

Editing: Frequent changes during the active editing phase.

Viewing: After the initial editing phase, diagrams remain static, and users only view them.

The second pattern accounts for the majority of diagram endpoint traffic. Repeatedly querying the database for content that no longer changes is wasteful, which makes caching especially beneficial. However, changes to diagrams in the editing phase must propagate quickly (within minutes). Simply caching database query results for a few minutes helps alleviate hot spots but won’t greatly benefit most diagrams, which might be viewed only several times per day over a few weeks. Meaningful performance gains require caching for much longer.

Solution: A more advanced caching strategy is needed to accommodate both editing (frequent changes) and viewing (static content). A long-term cache that is local to each instance and shared by its processes satisfies both requirements when it is combined with an eviction process that monitors changes and with explicit image versions that the editor passes in its requests.

- A local cache on each instance avoids additional infrastructure and reduces maintenance costs.
- The cache scales with the number of instances.

A tool like diskcache, which provides large, long-term storage without requiring significant RAM, is the best option for the local cache, especially as diskcache supports sharing the cache among multiple processes and can offer performance similar to or better than Memcached or Redis.

Query Workflow for the Image Endpoint

1. Check if the requested diagram is in the cache.
2. If not, query the database.

The URL for viewing an image is /{id}/image.png; this is the URL that is embedded in external services. For editing, the editor explicitly provides the image version: /{id}/image.png?v=123. This versioning ensures the cache always returns an image version that is equal to or newer than what the editor requested. Consequently, the editor sees the latest version instead of any stale cached content.
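The lookup rule can be sketched as follows. The function is written against the get(key, default) and set(key, value, expire=...) methods that diskcache.Cache provides, so any object with those methods can stand in for the cache in tests. The key format, the CachedImage type, and the load_from_db callable are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Protocol

CACHE_TTL_SECONDS = 24 * 60 * 60  # default expiry from the text: 24 hours


@dataclass(frozen=True)
class CachedImage:
    image_version: int
    png: bytes


class ImageCache(Protocol):
    """The subset of diskcache.Cache used by this sketch."""

    def get(self, key: str, default: Any = None) -> Any: ...

    def set(self, key: str, value: Any, expire: Optional[float] = None) -> bool: ...


def get_image(
    cache: ImageCache,
    load_from_db: Callable[[int], Optional[CachedImage]],
    diagram_id: int,
    requested_version: Optional[int] = None,
) -> Optional[CachedImage]:
    """Serve /{id}/image.png (requested_version=None) or /{id}/image.png?v=N."""
    key = f"image:{diagram_id}"
    cached = cache.get(key)
    # Public viewers accept any cached copy; the editor needs at least version N.
    if cached is not None and (
        requested_version is None or cached.image_version >= requested_version
    ):
        return cached
    image = load_from_db(diagram_id)
    if image is not None:
        cache.set(key, image, expire=CACHE_TTL_SECONDS)
    return image
```

An editor request with a newer version than the cached one falls through to the database and refreshes the entry, so later public views of the same instance also get the new image.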

While an editor sees updates right away, additional logic is needed to propagate changes to other users. This is where the eviction process comes in: every two minutes, each instance runs a process that retrieves a list of images changed since the last run and evicts them from the cache. Cache entries also expire after 24 hours by default, so even if the eviction process fails, a record won’t stay in the cache for more than a day.

The eviction process polls the database periodically, which does create some additional load, but it’s much simpler and more reliable than pub-sub, and it does not require any additional infrastructure. The eviction process pulls only IDs, so it should perform well even when tens of thousands of diagrams have changed. However, the image_version field must be indexed to support range queries. Additionally, if the eviction process hasn’t run for more than three hours, it must evict all records from the local cache without making any DB queries.
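A sketch of the eviction loop under these rules (two-minute interval, full flush after three hours without a successful run) follows. It relies only on delete(key) and clear(), both of which diskcache.Cache provides. fetch_changed_ids is a hypothetical callable that would run an indexed range query such as SELECT id FROM diagrams WHERE image_version > :floor, with the floor derived from the given wall-clock timestamp; that only works if versions are timestamp-based, as suggested in Step 1. The lookback margin is an assumption: because image_version carries the time the code was saved rather than the time rendering finished, a render that completes after a run could otherwise fall behind the watermark.

```python
import logging
import time
from typing import Callable, Iterable, Optional, Protocol

logger = logging.getLogger(__name__)

EVICTION_INTERVAL_SECONDS = 2 * 60       # run every two minutes
FULL_FLUSH_AFTER_SECONDS = 3 * 60 * 60   # no successful run for 3 hours -> flush all


class EvictableCache(Protocol):
    """The subset of diskcache.Cache used by this sketch."""

    def delete(self, key: str) -> bool: ...

    def clear(self) -> int: ...


class CacheEvictor:
    def __init__(
        self,
        cache: EvictableCache,
        fetch_changed_ids: Callable[[float], Iterable[int]],
        lookback_seconds: float = 300.0,
        clock: Callable[[], float] = time.time,
        last_run: Optional[float] = None,
    ) -> None:
        self.cache = cache
        self.fetch_changed_ids = fetch_changed_ids
        self.lookback_seconds = lookback_seconds
        self.clock = clock
        # None means "unknown", so the first run flushes the whole cache.
        self.last_run = last_run

    def run_once(self) -> int:
        """Evict changed diagrams and return the number of entries removed."""
        now = self.clock()  # taken before querying, so changes committed meanwhile are seen next run
        if self.last_run is None or now - self.last_run > FULL_FLUSH_AFTER_SECONDS:
            removed = self.cache.clear()  # no DB query in this branch
        else:
            since = self.last_run - self.lookback_seconds
            removed = sum(
                1
                for diagram_id in self.fetch_changed_ids(since)
                if self.cache.delete(f"image:{diagram_id}")
            )
        # Only reached on success: a failing query leaves last_run untouched,
        # so a long outage eventually ends in a full flush.
        self.last_run = now
        return removed

    def run_forever(self) -> None:
        while True:
            try:
                self.run_once()
            except Exception:
                logger.exception("cache eviction run failed")
            time.sleep(EVICTION_INTERVAL_SECONDS)
```

Starting with last_run=None makes the first run flush the cache, a conservative choice for an on-disk cache that may have outlived a long downtime.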

Performance of diskcache compared with Memcached accessed through pylibmc (from the diskcache project’s benchmarks):

    In [1]: import pylibmc
    In [2]: client = pylibmc.Client(['127.0.0.1'], binary=True)
    In [3]: client[b'key'] = b'value'
    In [4]: %timeit client[b'key']
    10000 loops, best of 3: 25.4 µs per loop

    In [5]: import diskcache as dc
    In [6]: cache = dc.Cache('tmp')
    In [7]: cache[b'key'] = b'value'
    In [8]: %timeit cache[b'key']
    100000 loops, best of 3: 11.8 µs per loop

Step 4. Extending the Model and Database Sharding

Goal: Extend the existing model and provide a basis for effective DB sharding.

Context: The MVP’s initial model provides only the most basic features and does not support much of the functionality users typically expect, such as organizing diagrams in folders or sharing diagram ownership.

Solution: Extend the model so that it covers the missing functionality and provides a basis for sharding. The extended model must support the following use cases:

- A diagram consists of UML code and a rendered image that can be displayed.
- A user can create and update diagrams.
- A diagram belongs to a single account, and each account always has exactly one owner.
- A user can own multiple accounts.
- In addition to the owner, an account can include multiple users who can access its diagrams; similarly, a user can belong to multiple accounts.
- A diagram can be placed in a folder, and folders can be nested within other folders.
- A folder or diagram can belong to only one folder at a time.
- A user can make a diagram publicly accessible.

SQL ERD for the new model:

In the new model, data is sharded by account, with minimal denormalization of the user model: the constraint changes from requiring a globally unique user email to requiring that each email be unique within its account. This supports users who belong to multiple accounts (one user record per account) and keeps all of an account’s data, including its users, on a single shard, which is what enables sharding.
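One way to express this model as tables is sketched below, under several assumptions: every table carries account_id as the shard key, user rows are duplicated per account with email unique only within an account, and a partial unique index allows at most one owner per account (guaranteeing exactly one still needs application logic). All table and column names are hypothetical. The DDL runs on SQLite through Python's sqlite3 module for easy testing; on PostgreSQL, the BLOB and integer flag columns would typically become BYTEA and BOOLEAN.

```python
import sqlite3

# Every table is keyed by account_id first, so an account's rows can live on one shard.
SCHEMA = """
CREATE TABLE accounts (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL
);

CREATE TABLE users (
    account_id INTEGER NOT NULL REFERENCES accounts(id),
    id INTEGER NOT NULL,
    email TEXT NOT NULL,
    is_owner INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (account_id, id),
    UNIQUE (account_id, email)  -- unique per account, not globally
);

-- At most one owner per account.
CREATE UNIQUE INDEX one_owner_per_account ON users(account_id) WHERE is_owner = 1;

CREATE TABLE folders (
    account_id INTEGER NOT NULL REFERENCES accounts(id),
    id INTEGER NOT NULL,
    parent_id INTEGER,  -- NULL for a top-level folder; one parent at most
    name TEXT NOT NULL,
    PRIMARY KEY (account_id, id),
    FOREIGN KEY (account_id, parent_id) REFERENCES folders(account_id, id)
);

CREATE TABLE diagrams (
    account_id INTEGER NOT NULL REFERENCES accounts(id),
    id INTEGER NOT NULL,
    folder_id INTEGER,  -- NULL when the diagram is not in a folder
    code TEXT NOT NULL,
    code_version INTEGER NOT NULL,
    image_version INTEGER,
    image BLOB,
    is_public INTEGER NOT NULL DEFAULT 0,
    PRIMARY KEY (account_id, id),
    FOREIGN KEY (account_id, folder_id) REFERENCES folders(account_id, id)
);

-- Supports the eviction process's range queries on image_version.
CREATE INDEX diagrams_image_version ON diagrams(image_version);
"""


def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(SCHEMA)
```

With this layout, the same person appears as one users row per account, matched by email, which is how a user can belong to or own several accounts without any cross-shard join.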